Abstract

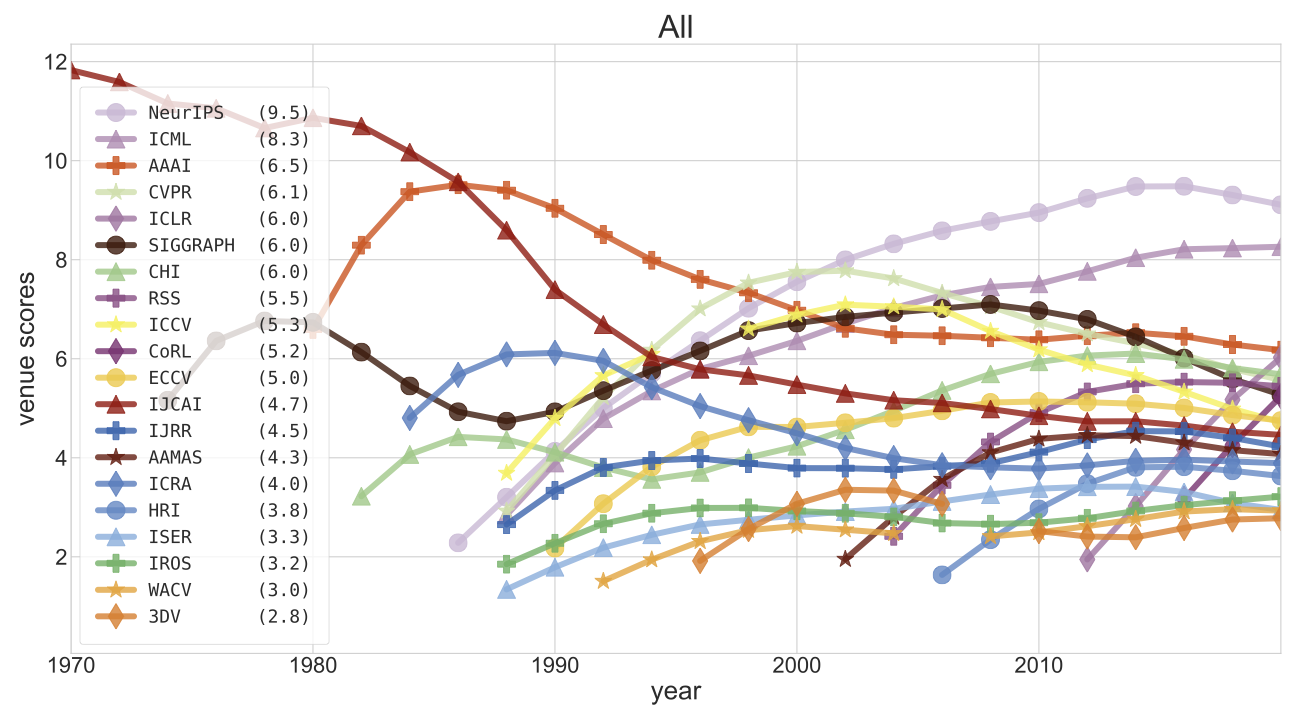

We present a method for automatically organizing and evaluating the quality of publishing venues in Computer Science. Because the method requires only publication data as input, we can demonstrate it on a large portion of the DBLP dataset, spanning 50 years, with millions of authors and thousands of publishing venues. By formulating venue authorship as a regression problem and targeting metrics of interest, we obtain a score for every conference and journal in our dataset. The scores can also be fit per year, giving a model of conference quality that shows how fields develop and change over time. Additionally, venue scores can be used to evaluate individual academic authors and academic institutions. We show that evaluating authors and institutions with venue scores produces quantitative measures comparable to approaches based on citations or peer assessment. Unlike many existing evaluation metrics, our approach relies on large-scale, openly available data, which makes it repeatable and transparent.
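To make the regression framing concrete, here is a minimal sketch under stated assumptions. The abstract does not specify the regression model, the target metric, or any preprocessing, so everything here is illustrative: the venue names, the toy author records, the binary target, the L2 penalty, and the helper names `fit_venue_scores` and `score_author` are all hypothetical. Each author is represented by paper counts per venue, a linear model is fit to predict the target, and the learned per-venue weights are read as venue scores.

```python
from typing import Dict, List


def fit_venue_scores(
    author_counts: List[Dict[str, int]],
    targets: List[float],
    venues: List[str],
    l2: float = 0.1,
    lr: float = 0.05,
    steps: int = 2000,
) -> Dict[str, float]:
    """Fit an L2-regularized linear regression by plain gradient descent.

    Each author is a dict mapping venue -> number of papers there.
    The learned weight of a venue is interpreted as its score.
    (Illustrative choice of model; not taken from the paper.)
    """
    weights = {v: 0.0 for v in venues}
    n = len(author_counts)
    for _ in range(steps):
        # Gradient of the L2 penalty term.
        grads = {v: l2 * weights[v] for v in venues}
        # Gradient of the mean squared error term.
        for counts, y in zip(author_counts, targets):
            pred = sum(weights[v] * c for v, c in counts.items())
            err = pred - y
            for v, c in counts.items():
                grads[v] += err * c / n
        for v in venues:
            weights[v] -= lr * grads[v]
    return weights


def score_author(counts: Dict[str, int], weights: Dict[str, float]) -> float:
    """Score an author as the sum of venue scores over their papers."""
    return sum(weights.get(v, 0.0) * c for v, c in counts.items())


# Toy data (entirely made up): paper counts per venue for four authors,
# with a hypothetical binary "metric of interest" as the regression target.
venues = ["VenueA", "VenueB", "VenueC"]
author_counts = [
    {"VenueA": 3},
    {"VenueA": 1, "VenueB": 2},
    {"VenueB": 1, "VenueC": 3},
    {"VenueC": 2},
]
targets = [1.0, 1.0, 0.0, 0.0]

weights = fit_venue_scores(author_counts, targets, venues)
for venue in sorted(weights, key=weights.get, reverse=True):
    print(f"{venue}: {weights[venue]:.3f}")
print("new author:", round(score_author({"VenueA": 2, "VenueB": 1}, weights), 3))
```

In this toy setup, venues where authors with a positive target publish more often receive higher weights, and an author or institution score is the sum of those weights over their papers. A per-year variant could, under the same assumptions, treat each (venue, year) pair as a separate feature.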

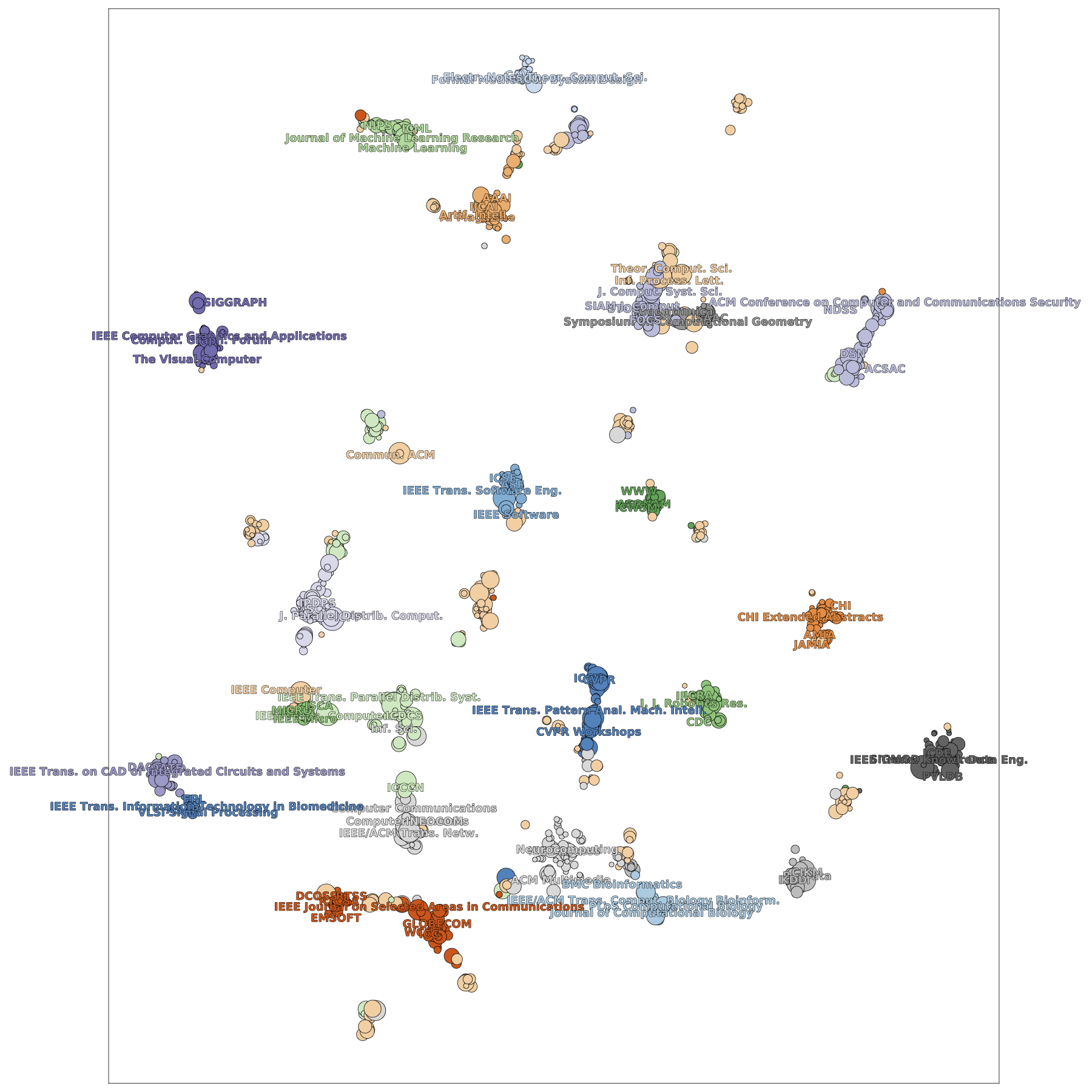

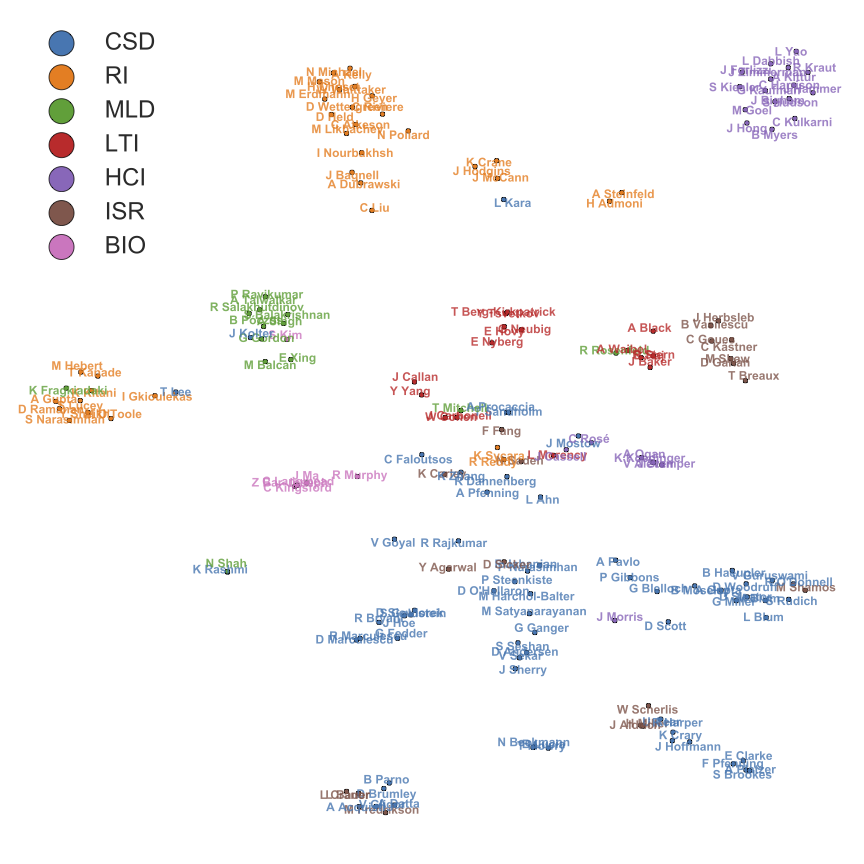

Visualizations

Overview of Computer Science: a t-SNE embedding of the major publishing venues.

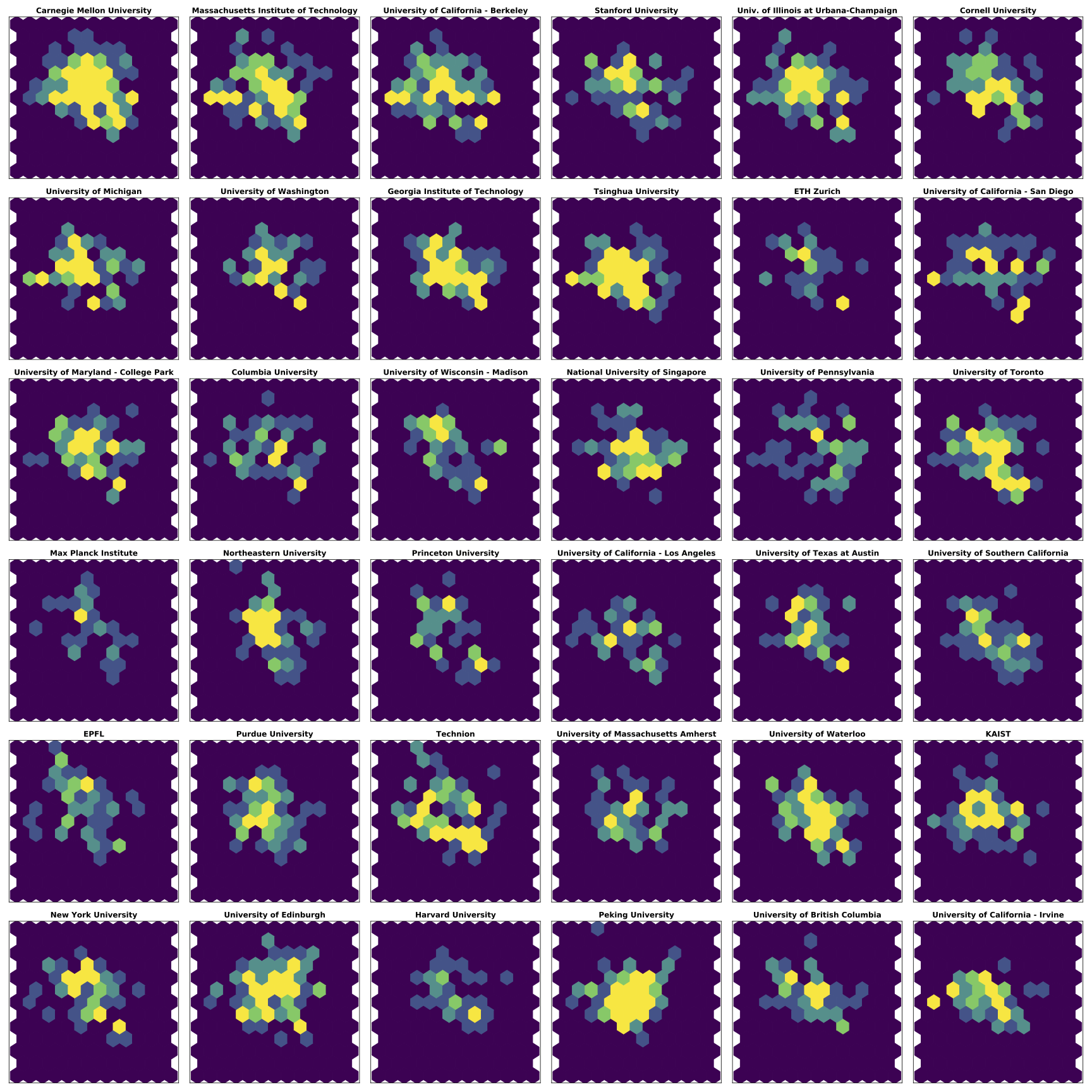

Embedding of faculty and heatmap of university CS departments.

Paper